TL;DR: We are excited to launch the FlowerTune LLM Leaderboard! 🚀 In this initiative, we provide a complete pipeline for the federated fine-tuning of a pre-trained Mistral-7B across 4 tasks, with model performance measured against a suitable baseline. Feel free to explore your own methods (tweak the hyperparameters, switch models, or try different FL algorithms) that perform this same fine-tuning process, only better! We invite you to submit your project, secure your spot on the leaderboard, and showcase your expertise in advancing the capabilities of federated LLM fine-tuning! Check all the details on our website: flower.ai/benchmarks/llm-leaderboard.

Why do this?

Large language models (LLMs) excel across various ML tasks but face challenges as the supply of high-quality public data dwindles, and the need for domain-specific knowledge in areas like health, law, and finance grows. Federated LLM fine-tuning addresses these issues by enabling collaborative model improvement without sharing raw data, thus protecting privacy and leveraging a wider range of sensitive datasets to create more personalized, specialized models.

The FlowerTune LLM Leaderboard initiative aims to establish baselines for various domains, promoting real-world, LLM-focused federated fine-tuning that everyone can follow. We encourage participants to explore their own methods to achieve better model performance. Your contributions are invaluable, and we hope that, through collective efforts, this initiative can make federated LLM fine-tuning more democratic, personalized, and specialized.

How to Participate

The Flower Team has compiled 4 federated LLM fine-tuning challenges across different domains: general NLP, finance, medical, and code.

The first step to participate in the FlowerTune LLM Leaderboard is to select one of the challenges above. Next, in a new Python environment, run the following commands to create a new project:

    # Install Flower
    pip install flwr

    # Create a new project using the FlowerTune template
    flwr new --framework=FlowerTune

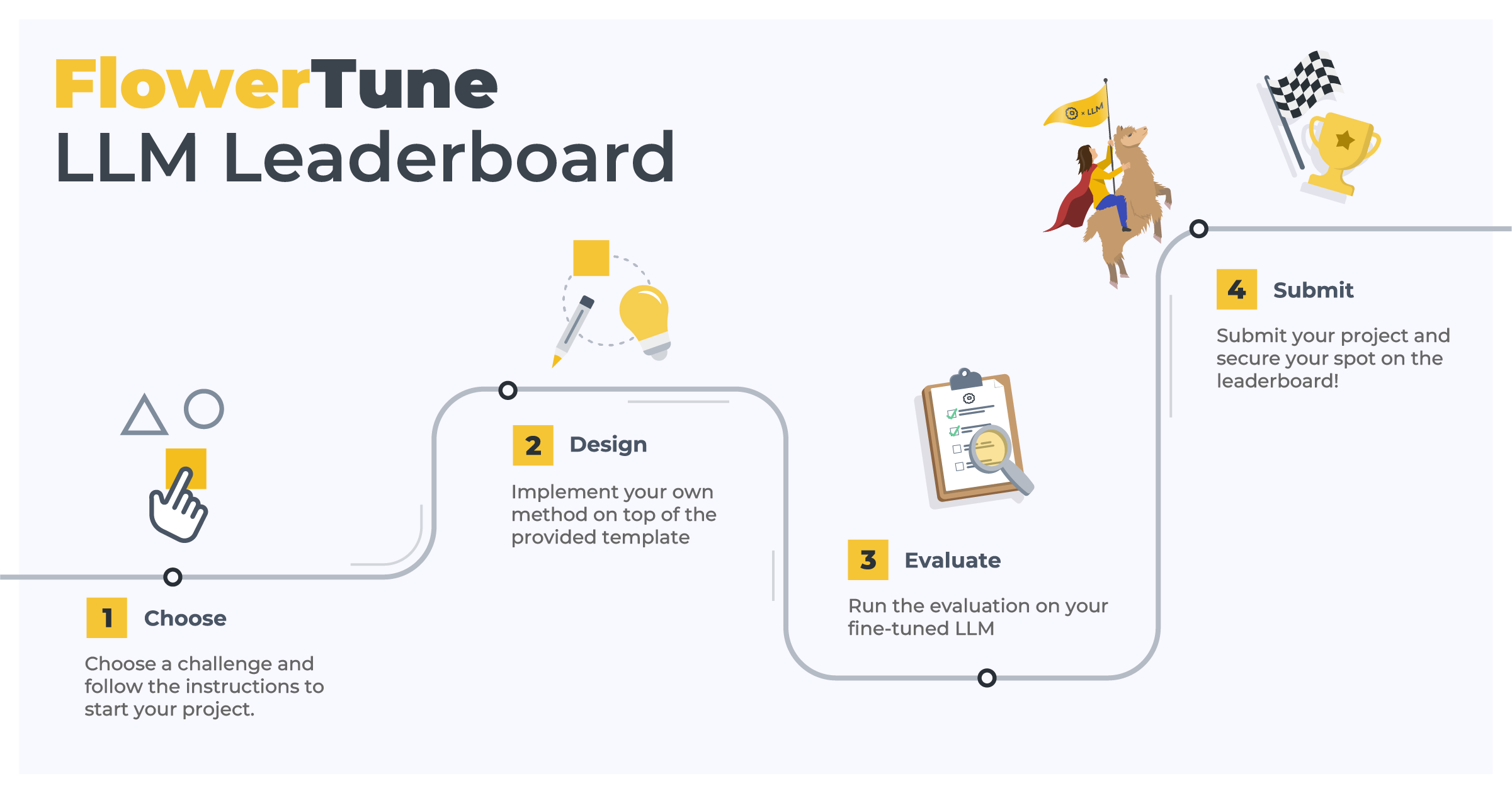

From there, it’s time to code! Build your own methods on top of the provided template. Each challenge has its own evaluation metrics, so run the evaluation on your fine-tuned LLM and aim for high performance. Finally, submit your project and secure your spot on the leaderboard!
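
As one illustration of what "your own methods" could mean on the FL-algorithm side, here is a minimal sketch of federated averaging (FedAvg) in plain Python. It is not the template's code: the source does not say which aggregation strategy the template uses, and the function name `fedavg` and the flat-list weight format are assumptions made for this example.

```python
from typing import List, Tuple


def fedavg(client_updates: List[Tuple[List[float], int]]) -> List[float]:
    """Average client weights, weighting each client by its number of examples.

    Hypothetical helper for illustration only: each update is
    (flat list of weights, number of local training examples).
    """
    if not client_updates:
        raise ValueError("need at least one client update")

    total_examples = sum(num for _, num in client_updates)
    if total_examples <= 0:
        raise ValueError("total number of examples must be positive")

    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, num in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send the same number of weights")
        share = num / total_examples  # this client's contribution to the average
        for i, w in enumerate(weights):
            averaged[i] += w * share
    return averaged


if __name__ == "__main__":
    # Two clients; the second holds three times as much data as the first.
    updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
    print(fedavg(updates))
```

Only the weights and example counts are combined; the raw training data never leaves the clients. Swapping the weighting rule in a function like this is one place where a different FL algorithm could be tried.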

Check out the instructional video below to guide you through the entire participation process:

Rules and FAQ

With the FlowerTune LLM Leaderboard, the Flower Team aims to evaluate LLM fine-tuning solutions in a realistic FL environment and thereby ensure a fair competition. To achieve this, we have implemented real-world constraints, such as a 13-billion-parameter limit for base models and a 200-gigabyte cap on overall communication costs. You can find the detailed rules, along with a FAQ we have compiled ahead of time, on our website: flower.ai/benchmarks/llm-leaderboard.
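
To get a rough feel for the communication cap before training, a back-of-the-envelope estimate like the sketch below may help. It rests on assumptions that are not part of the official rules: every selected client downloads and uploads the exchanged parameters once per round, a gigabyte is taken as 10^9 bytes, and the names `RunPlan`, `total_communication_bytes`, and `within_budget` are hypothetical. The leaderboard's own accounting, as described on the website, is what counts.

```python
from dataclasses import dataclass

GB = 1000 ** 3  # assumption: decimal gigabytes; the rules page is authoritative


@dataclass
class RunPlan:
    """Hypothetical description of a federated fine-tuning run."""
    exchanged_params: int   # parameters sent each round (e.g. adapter weights only)
    bytes_per_param: int    # 2 for 16-bit values, 4 for 32-bit values
    clients_per_round: int  # clients selected in every round
    num_rounds: int         # number of federated rounds


def total_communication_bytes(plan: RunPlan) -> int:
    """Estimate the total traffic, assuming one download and one upload per client per round."""
    payload = plan.exchanged_params * plan.bytes_per_param
    return 2 * payload * plan.clients_per_round * plan.num_rounds


def within_budget(plan: RunPlan, budget_gb: int = 200) -> bool:
    """Check the estimate against a cap given in gigabytes."""
    return total_communication_bytes(plan) <= budget_gb * GB


if __name__ == "__main__":
    # Illustrative numbers only: 20M exchanged parameters in 32-bit precision.
    adapter_plan = RunPlan(exchanged_params=20_000_000, bytes_per_param=4,
                           clients_per_round=2, num_rounds=100)
    print(total_communication_bytes(adapter_plan) / GB, within_budget(adapter_plan))

    # Exchanging all 7B parameters in 16-bit precision uses up the cap within a few rounds.
    full_plan = RunPlan(exchanged_params=7_000_000_000, bytes_per_param=2,
                        clients_per_round=2, num_rounds=4)
    print(total_communication_bytes(full_plan) / GB, within_budget(full_plan))
```

Under these assumptions, exchanging only a small set of trainable parameters leaves room for many rounds, while sending a full 7B model each round does not.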

If you need any clarification about the rules, please feel free to post in our dedicated FlowerTune category on the Flower Discuss forum, or join our Slack and ask questions in the #flowertune-llm-leaderboard channel.

Alternative Ways of Contributing

If you have an idea for a new challenge related to federated LLM fine-tuning and would like to add it to the FlowerTune LLM Leaderboard, please reach out to us via Slack. We greatly welcome this type of contribution!

Flower AI Summit 2024 Announcement

The FlowerTune LLM Leaderboard was first announced at the Flower AI Summit 2024. Go to our YouTube channel to watch this and other announcements, as well as the talks and tutorials from the event.