Medical LLM Leaderboard

Embrace Federated LLM Fine-Tuning and Secure Your Spot on the Leaderboard!

| Rank | Team | Base Model | Comm. Costs | Average (↑) | PubMedQA | MedMCQA | MedQA | CareQA | Code | Date (DD.MM.YY) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | ZeroOne.AI | Llama3.1-Aloe-Beta-8B | 2.0 GB | 63.57 | 74.80 | 55.39 | 59.31 | 64.79 | link | 14.01.26 |
| 2 | FL-finetune-JB-DC | Bio-Medical-Llama-3-8B | 1.6 GB | 63.12 | 70.40 | 60.93 | 65.82 | 55.36 | link | 12.04.25 |
| 3 | AI4EOSC Team | Bio-Medical-Llama-3-8B | 1.0 GB | 62.14 | 66.20 | 60.29 | 68.42 | 53.64 | link | 25.04.25 |
| 4 | Gachon Cognitive Computing Lab | Bio-Medical-Llama-3-8B | 2.0 GB | 62.12 | 65.80 | 60.38 | 68.57 | 53.76 | link | 19.03.25 |
| 5 | mHealth Lab | Bio-Medical-Llama-3-8B | 0.7 GB | 62.03 | 65.80 | 68.34 | 60.31 | 53.67 | link | 21.04.25 |
| 6 | ZJUDAI | Llama-3.1-8B-Instruct | 2.0 GB | 61.75 | 59.94 | 69.40 | 59.94 | 57.74 | link | 04.04.25 |
| 7 | mHealth Lab | Bio-Medical-Llama-3-8B | 2.9 GB | 61.46 | 69.60 | 59.12 | 63.94 | 53.18 | link | 21.04.25 |
| 8 | AI4EOSC Team | Bio-Medical-Llama-3-8B | 2.0 GB | 61.14 | 70.80 | 58.04 | 62.84 | 52.87 | link | 06.03.25 |
| 9 | FL-finetune-JB-DC | Bio-Medical-Llama-3-8B | 1.6 GB | 60.75 | 70.20 | 59.29 | 59.23 | 54.31 | link | 12.04.25 |
| 10 | ZJUDAI | Qwen2.5-7B-Instruct | 1.5 GB | 60.64 | 65.15 | 56.80 | 65.15 | 55.46 | link | 04.04.25 |
| 11 | Gachon Cognitive Computing Lab | Bio-Medical-Llama-3-8B | 2.0 GB | 60.37 | 70.60 | 57.68 | 61.50 | 51.68 | link | 21.11.24 |
| 12 | ZJUDAI | Mistral-7B-Instruct-v0.3 | 2.0 GB | 53.45 | 54.40 | 55.20 | 54.40 | 49.80 | link | 04.04.25 |
| 13 | Massimo R. Scamarcia | Qwen2.5-7B-Instruct | 45.1 GB | 49.07 | 44.60 | 39.06 | 55.46 | 57.16 | link | 23.11.24 |
| 14 | Abdelkareem Elkhateb | Qwen2.5-1.5B-Instruct | 11.0 GB | 46.00 | 52.60 | 40.81 | 40.22 | 50.38 | link | 02.03.25 |
| 15 | Massimo R. Scamarcia | Mistral-7B-Instruct-v0.3 | 45.1 GB | 40.95 | 63.80 | 25.60 | 39.83 | 34.57 | link | 23.11.24 |
| 16 | mHealth Lab | JSL-MedLlama-3-8B-v2.0 | 0.3 GB | 39.30 | 58.60 | 27.50 | 30.30 | 40.80 | link | 21.04.25 |
| 17 | mHealth Lab | Llama-medx_v3.2 | 18.6 GB | 39.04 | 39.40 | 36.34 | 41.56 | 38.87 | link | 21.04.25 |
| 18 | Baseline | Mistral-7B-v0.3 | 40.7 GB | 32.82 | 59.00 | 23.69 | 27.10 | 21.47 | link | 01.10.24 |
| 19 | mHealth Lab | meerkat-7b-v1.0 | 18.5 GB | 22.30 | 36.00 | 13.67 | 25.77 | 13.76 | link | 21.04.25 |
| 20 | Massimo R. Scamarcia | Qwen3-0.6B | 8.1 GB | 21.94 | 20.40 | 20.13 | 20.35 | 26.86 | link | 04.05.25 |
| 21 | T-IoI@UNR | SmolLM2-135M-Instruct | 1.4 GB | 17.02 | 54.20 | 6.93 | 0.09 | 6.86 | link | 14.04.25 |
| 22 | T-IoI@UNR | SmolLM2-135M | 1.4 GB | 11.29 | 7.20 | 16.80 | 2.67 | 18.51 | link | 14.04.25 |
| 23 | T-IoI@UNR | SmolLM2-360M-Instruct | 2.5 GB | 9.37 | 22.00 | 7.14 | 1.33 | 7.04 | link | 14.04.25 |
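The Average column appears to be the unweighted mean of the four benchmark accuracies (PubMedQA, MedMCQA, MedQA, CareQA); the leaderboard does not state this, so treat it as an assumption. The short sketch below recomputes it for three of the rows above. The helper name `leaderboard_average` is hypothetical, and small differences in the last displayed digit are expected from rounding.

```python
from statistics import mean

# Scores (%) copied from three leaderboard rows, in column order
# PubMedQA, MedMCQA, MedQA, CareQA, followed by the reported Average.
ROWS = {
    "Rank 1 (ZeroOne.AI)": ([74.80, 55.39, 59.31, 64.79], 63.57),
    "Rank 2 (FL-finetune-JB-DC)": ([70.40, 60.93, 65.82, 55.36], 63.12),
    "Rank 21 (T-IoI@UNR)": ([54.20, 6.93, 0.09, 6.86], 17.02),
}


def leaderboard_average(scores: list[float]) -> float:
    """Hypothetical helper: unweighted mean of the four benchmark scores."""
    if len(scores) != 4:
        raise ValueError("expected PubMedQA, MedMCQA, MedQA and CareQA scores")
    return mean(scores)


for name, (scores, reported) in ROWS.items():
    computed = leaderboard_average(scores)
    # Print both values side by side; they should agree to within ~0.01.
    print(f"{name}: computed {computed:.4f}, reported {reported:.2f}")
```

Because every benchmark carries equal weight under this assumption, a single very low score (for example MedQA at 0.09 for rank 21) pulls the average down sharply.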

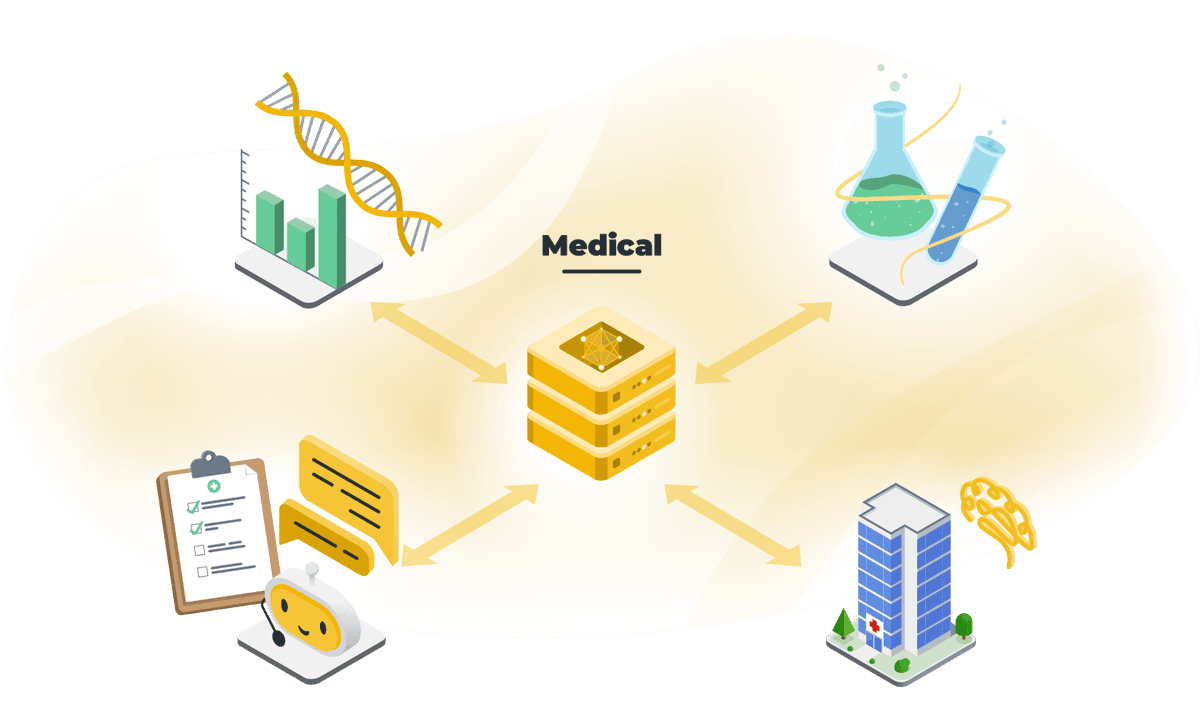

In healthcare, the accuracy of information extraction and decision-support systems can directly affect patient outcomes. Federated LLM fine-tuning on medical tasks addresses the need for models that are familiar with medical terminology, clinical practice, and the kind of information found in patient records. With federated learning, hospitals and research institutions collaboratively train a shared model while each keeps its sensitive patient records on site: only model updates, not raw data, leave each institution. This approach can improve a model's ability to understand and assist with complex medical contexts, and keeping data local can help institutions comply with health-data regulations such as HIPAA, which in turn supports trust in, and adoption of, AI in medicine.
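The leaderboard does not say which aggregation strategy each team used. As a minimal sketch, assuming the classic Federated Averaging (FedAvg) rule, a server merges one round of client updates into a shared model as an example-weighted mean of the clients' parameters. The function name `fedavg`, the three hospital updates, and the toy 3-element vectors are all hypothetical.

```python
from typing import List, Tuple

# A client update is (parameter vector, number of local training examples).
Update = Tuple[List[float], int]


def fedavg(updates: List[Update]) -> List[float]:
    """FedAvg aggregation: example-weighted mean of client parameter vectors.

    Only these parameter vectors reach the server; each client's raw
    training records never leave the client.
    """
    if not updates:
        raise ValueError("need at least one client update")
    dim = len(updates[0][0])
    if any(len(params) != dim for params, _ in updates):
        raise ValueError("all clients must send vectors of the same length")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total number of examples must be positive")

    global_params = [0.0] * dim
    for params, n in updates:
        weight = n / total  # clients with more local data count for more
        for i, value in enumerate(params):
            global_params[i] += weight * value
    return global_params


# Hypothetical round: three hospitals return toy parameter vectors after
# local fine-tuning, together with their local example counts.
hospital_updates = [
    ([1.0, 2.0, 3.0], 100),
    ([2.0, 2.0, 2.0], 300),
    ([4.0, 0.0, 1.0], 100),
]
print(fedavg(hospital_updates))
```

In a real run the vectors would be model weights rather than three floats, and the server would send the averaged parameters back to the hospitals as the starting point for the next round of local training.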