
NLP LLM Leaderboard

Embrace Federated LLM Fine-Tuning and Secure Your Spot on the Leaderboard!


| Rank | Team | Base Model | Comm. Costs | Average (↑) | STEM | Social Sciences | Humanities | Code | Date |
|---|---|---|---|---|---|---|---|---|---|
| 1 | ZeroOne.AI | Internlm3-8b-instruct | 2.9 GB | 69.25 | 66.13 | 80.76 | 60.87 | link | 14.01.26 |
| 2 | Gachon Cognitive Computing Lab | Internlm3-8b-instruct | 2.9 GB | 69.19 | 66.22 | 80.56 | 60.80 | link | 19.03.25 |
| 3 | T-IoI@UNR | Gemma2-9b-cpt-sahabatai-v1-instruct | 0.7 GB | 67.78 | 59.75 | 81.11 | 62.48 | link | 09.03.25 |
| 4 | FL-finetune-JB-DC | Qwen2.5-7B-Instruct | 1.5 GB | 67.71 | 63.11 | 79.29 | 60.74 | link | 12.04.25 |
| 5 | FL-finetune-JB-DC | Qwen2.5-7B-Instruct | 1.5 GB | 67.18 | 62.67 | 78.94 | 59.93 | link | 12.04.25 |
| 6 | Gachon Cognitive Computing Lab | Gemma2-9B-instruct | 0.7 GB | 64.84 | 54.33 | 79.92 | 60.28 | link | 10.12.24 |
| 7 | ZJUDAI | Qwen2.5-7B-Instruct | 1.5 GB | 64.04 | 52.52 | 79.27 | 60.32 | link | 04.04.25 |
| 8 | Massimo R. Scamarcia | Phi-4 | 44.7 GB | 55.64 | 40.66 | 74.52 | 51.75 | link | 12.01.25 |
| 9 | ZJUDAI | Qwen2.5-1.5B-Instruct | 0.7 GB | 53.32 | 47.13 | 62.30 | 50.54 | link | 04.04.25 |
| 10 | Alessandro Pinto | Qwen2.5-1.5B-Instruct | 2.1 GB | 52.77 | 44.49 | 63.89 | 49.92 | link | 14.03.25 |
| 11 | ZJUDAI | Mistral-7B-Instruct-v0.3 | 2.0 GB | 43.05 | 29.94 | 54.27 | 44.93 | link | 04.04.25 |
| 12 | ZJUDAI | Llama-3.1-8B-Instruct | 2.0 GB | 31.49 | 22.87 | 39.55 | 32.05 | link | 04.04.25 |
| 13 | Baseline | Llama-3.2-3B | 27.4 GB | 21.68 | 22.20 | 25.32 | 17.53 | link | 09.12.24 |
| 14 | T-IoI@UNR | SmolLM2-135M-Instruct | 0.7 GB | 21.10 | 18.68 | 21.90 | 22.74 | link | 14.04.25 |
| 15 | ZJUDAI | TinyLlama-1.1B-Chat-v1.0 | 0.7 GB | 19.23 | 14.18 | 21.61 | 21.91 | link | 04.04.25 |
| 16 | T-IoI@UNR | SmolLM2-360M-Instruct | 1.3 GB | 17.18 | 19.44 | 19.43 | 12.68 | link | 14.04.25 |
| 17 | Baseline | Mistral-7B-v0.3 | 40.7 GB | 12.82 | 12.37 | 13.49 | 12.60 | link | 01.10.24 |
| 18 | ZJUDAI | Llama-3.2-1B-Instruct | 0.5 GB | 12.22 | 12.88 | 17.61 | 6.16 | link | 04.04.25 |
| 19 | T-IoI@UNR | SmolLM2-135M | 0.7 GB | 2.84 | 2.94 | 3.31 | 2.29 | link | 14.04.25 |
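The "Average (↑)" column appears to be the unweighted mean of the STEM, Social Sciences and Humanities scores: for rank 1, (66.13 + 80.76 + 60.87) / 3 ≈ 69.25. Rows are ordered by that average. The minimal sketch below assumes this definition. It recomputes the average for a few rows copied from the table and sorts them. The helpers `average_score` and `rank` are hypothetical names for illustration only and are not part of any leaderboard tooling.

```python
from statistics import mean

# A few rows copied from the leaderboard above:
# (team, base model, STEM, Social Sciences, Humanities, listed Average)
ROWS = [
    ("Baseline", "Mistral-7B-v0.3", 12.37, 13.49, 12.60, 12.82),
    ("T-IoI@UNR", "Gemma2-9b-cpt-sahabatai-v1-instruct", 59.75, 81.11, 62.48, 67.78),
    ("ZeroOne.AI", "Internlm3-8b-instruct", 66.13, 80.76, 60.87, 69.25),
    ("Baseline", "Llama-3.2-3B", 22.20, 25.32, 17.53, 21.68),
    ("Gachon Cognitive Computing Lab", "Internlm3-8b-instruct", 66.22, 80.56, 60.80, 69.19),
]


def average_score(stem: float, social: float, humanities: float) -> float:
    """Hypothetical helper: unweighted mean of the three category scores
    (an assumed definition of the 'Average' column)."""
    return mean([stem, social, humanities])


def rank(rows):
    """Hypothetical helper: sort rows by recomputed average, highest first."""
    return sorted(rows, key=lambda r: average_score(r[2], r[3], r[4]), reverse=True)


for position, (team, model, stem, social, hum, listed) in enumerate(rank(ROWS), start=1):
    recomputed = average_score(stem, social, hum)
    # Listed values are rounded to two decimals, so allow a small tolerance.
    assert abs(recomputed - listed) <= 0.01, (team, recomputed, listed)
    print(f"{position}. {team} ({model}): {recomputed:.2f}")
```

The input rows are deliberately shuffled. After sorting, they come back in the same relative order as the leaderboard. The tolerance check allows for rounding in the published figures.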

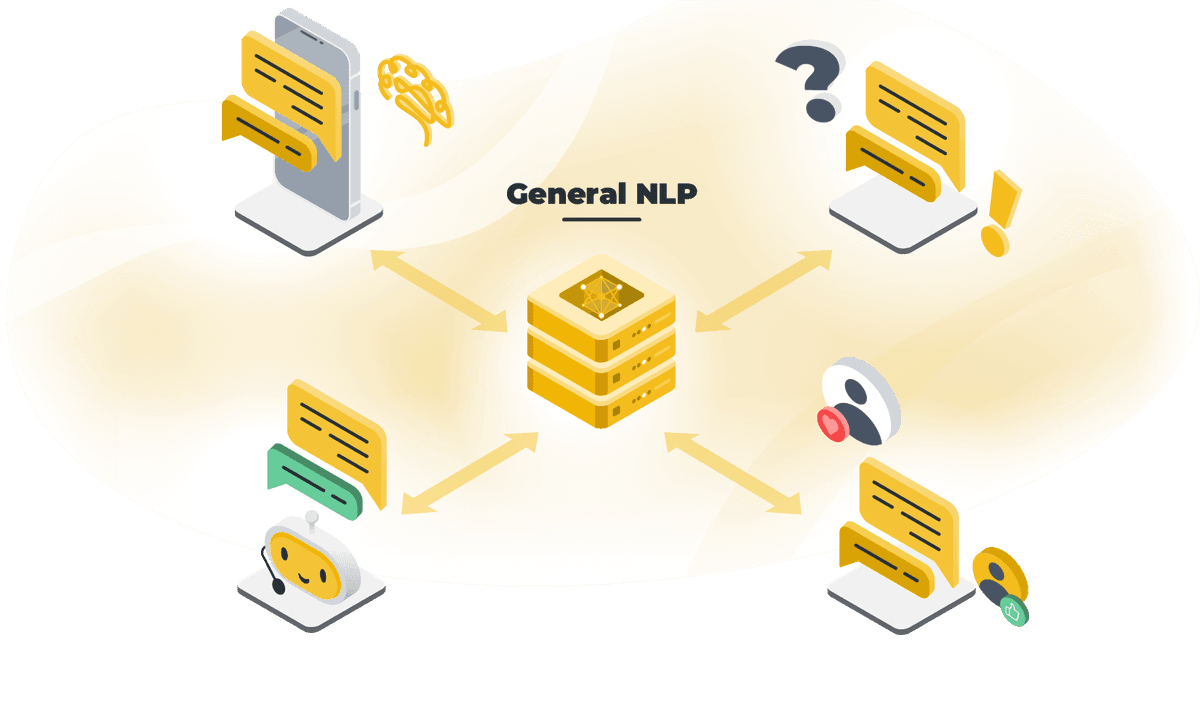

In the realm of Natural Language Processing (NLP), developing models that can effectively understand and generate human language is foundational. Federated fine-tuning of LLMs trained on general NLP tasks is vital because it democratizes LLM training across a diverse set of downstream tasks while preserving data privacy. This approach helps ensure that the fine-tuned language models are not only robust and generalizable across various linguistic contexts but also attuned to the nuances and colloquialisms present in different datasets.
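Federated fine-tuning can preserve privacy because each client trains on its own data and sends only parameter updates to a server, which combines them. The minimal sketch below illustrates this with plain Federated Averaging (FedAvg) over small, hypothetical adapter weights. It is not necessarily the aggregation strategy used by any leaderboard entry. The names `fedavg`, `Params` and `lora_A` are illustrative assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical parameter container: parameter name -> flat list of floats
# (e.g. the weights of a small adapter). Only these values leave a client;
# the raw training text never does.
Params = Dict[str, List[float]]


def fedavg(updates: List[Tuple[Params, int]]) -> Params:
    """Federated Averaging: weight each client's parameters by its number
    of local training examples and return the weighted mean."""
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("clients must report a positive total example count")
    aggregated: Params = {}
    for name in updates[0][0]:
        length = len(updates[0][0][name])
        aggregated[name] = [
            sum(params[name][i] * n for params, n in updates) / total
            for i in range(length)
        ]
    return aggregated


# Two hypothetical clients that fine-tuned locally on private data of different sizes.
client_a = ({"lora_A": [1.0, 2.0]}, 100)
client_b = ({"lora_A": [3.0, 4.0]}, 300)
print(fedavg([client_a, client_b]))  # {'lora_A': [2.5, 3.5]}
```

Only parameters travel between clients and server. Keeping them small, for example by training lightweight adapters instead of full model weights, is therefore one general way to reduce communication cost.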