Flower Datasets¶

Flower Datasets (flwr-datasets) is a library that enables the quick and easy creation of datasets for federated learning/analytics/evaluation. It enables heterogeneity (non-iidness) simulation and division of datasets with the preexisting notion of IDs. The library was created by the Flower Labs team that also created Flower : A Friendly Federated AI Framework.

Try out an interactive demo to generate code and visualize heterogeneous divisions at the bottom of this page.

Flower Datasets Framework¶

Install¶

python -m pip install "flwr-datasets[vision]"

Check out all the details on how to install Flower Datasets in Installation.

Tutorials¶

A learning-oriented series of tutorials is the best place to start.

How-to guides¶

Problem-oriented how-to guides show step-by-step how to achieve a specific goal.

References¶

Information-oriented API reference and other reference material.

References

Reference docs

Contributor tutorials

Main features¶

Flower Datasets library supports:

Downloading datasets - choose the dataset from Hugging Face’s

dataset(link)(*)Partitioning datasets - choose one of the implemented partitioning schemes or create your own.

Creating centralized datasets - leave parts of the dataset unpartitioned (e.g. for centralized evaluation)

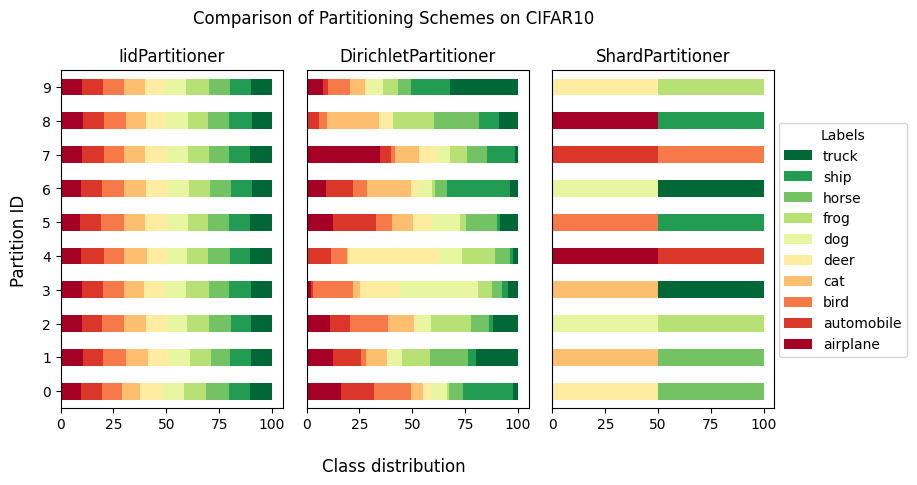

Visualization of the partitioned datasets - visualize the label distribution of the partitioned dataset (and compare the results on different parameters of the same partitioning schemes, different datasets, different partitioning schemes, or any mix of them)

Note

(*) Once the dataset is available on HuggingFace Hub, it can be immediately used in Flower Datasets without requiring approval from the Flower team or the need for custom code.

Thanks to using Hugging Face’s datasets used under the hood, Flower Datasets integrates with the following popular formats/frameworks:

Hugging Face

PyTorch

TensorFlow

Numpy

Pandas

Jax

Arrow

Here are a few of the Partitioners that are available: (for a full list see link )

Partitioner (the abstract base class)

PartitionerIID partitioning

IidPartitioner(num_partitions)Dirichlet partitioning

DirichletPartitioner(num_partitions, partition_by, alpha)Distribution partitioning

DistributionPartitioner(distribution_array, num_partitions, num_unique_labels_per_partition, partition_by, preassigned_num_samples_per_label, rescale)InnerDirichlet partitioning

InnerDirichletPartitioner(partition_sizes, partition_by, alpha)PathologicalPartitioner

PathologicalPartitioner(num_partitions, partition_by, num_classes_per_partition, class_assignment_mode)Natural ID partitioner

NaturalIdPartitioner(partition_by)Size partitioner (the abstract base class for the partitioners dictating the division based the number of samples)

SizePartitionerLinear partitioner

LinearPartitioner(num_partitions)Square partitioner

SquarePartitioner(num_partitions)Exponential partitioner

ExponentialPartitioner(num_partitions)more to come in the future releases (contributions are welcome).

How To Use the library¶

Learn how to use the flwr-datasets library from the Quickstart examples .

Distinguishing Features¶

What makes Flower Datasets stand out from other libraries?

Access to the largest online repository of datasets:

The library functionality is independent of the dataset, so you can use any dataset available on 🤗Hugging Face Datasets. This means that others can immediately benefit from the dataset you added.

Out-of-the-box reproducibility across different projects.

Access to naturally dividable datasets (with some notion of id) and datasets typically used in centralized ML that need partitioning.

Customizable levels of dataset heterogeneity:

Each

Partitionertakes arguments that allow you to customize the partitioning scheme to your needs.Partitioning can also be applied to the dataset with naturally available division.

Flexible and open for extensions API.

New custom partitioning schemes (

Partitionersubclasses) integrated with the whole ecosystem.

Join the Flower Community¶

The Flower Community is growing quickly - we’re a friendly group of researchers, engineers, students, professionals, academics, and other enthusiasts.

Recommended FL Datasets¶

Below we present a list of recommended datasets for federated learning research, which can be

used with Flower Datasets flwr-datasets.

Note

All datasets from HuggingFace Hub can be used with our library. This page presents just a set of datasets we collected that you might find useful.

For more information about any dataset, visit its page by clicking the dataset name.

Image Datasets¶

Name |

Size |

Image Shape |

|---|---|---|

train 60k; test 10k |

28x28 |

|

train 50k; test 10k |

32x32x3 |

|

train 50k; test 10k |

32x32x3 |

|

train 60k; test 10k |

28x28 |

|

train 814k |

28x28 |

|

train 100k; valid 10k |

64x64x3 |

|

train 7.3k; test 2k |

16x16 |

|

train 10k |

227x227 |

|

train 90k; valid 90k; test 90k |

32x32x3 |

|

train 8.7k |

varies |

|

train 15.6k |

varies |

|

train 18.6k; test 4.7k |

varies |

|

train 73.3k; test 26k; extra 531k |

32x32x3 |

|

train 2.1k; test 0.9k |

varies |

|

train 59k; test 9k |

32x32 |

Audio Datasets¶

Name |

Size |

Subset |

|---|---|---|

train 64.7k |

v0.01 |

|

train 105.8k |

v0.02 |

|

train 70.3k |

||

varies |

14 versions |

|

varies |

clean/other |

Tabular Datasets¶

Name |

Size |

|---|---|

train 32.6k |

|

train 8.1k |

|

train 150 |

|

train 12.9k; test 3.2k |

|

train 350k; test 87.5k |

Text Datasets¶

Name |

Size |

Category |

|---|---|---|

train 1.6M; test 0.5k |

Sentiment |

|

full 974; sanitized 427 |

General |

|

test 164 |

General |

|

varies |

General |

|

train 4.8k |

Financial |

|

train 0.9k; validation 0.1k; test 0.2k |

Financial |

|

train 9.5k; validation 2.4k |

Financial |

|

train 2M; validation 11k |

Medical |

|

train 183k; validation 4.3k; test 6.2k |

Medical |

|

train 10.1k; test 1.3k; validation 1.3k |

Medical |

Multimodal Datasets¶

Name |

Size |

Modalities |

Category |

|---|---|---|---|

36k samples |

Spectrogram images + time-series KPIs |

Wireless jamming classification |