Announcing Flower 1.26.1

The Flower Team is excited to announce the release of Flower 1.26.1 stable 🎉

Announcing Flower Datasets 0.6.0

The Flower Team is excited to announce the release of Flower Datasets 0.6.0 🎉

Announcing Flower 1.26

The Flower Team is excited to announce the release of Flower 1.26 stable 🎉

Flower Labs and Starcloud Reach a Major AI Milestone in Orbit

Flower Labs and Starcloud successfully ran a decentralized AI workload on an operational satellite.

Announcing Flower 1.25

The Flower Team is excited to announce the release of Flower 1.25 stable 🎉

Enterprise-Grade Federated AI: Flower Architectural Patterns

Learn how Flower delivers full enterprise-grade federated AI for secure and regulated environments.

Announcing Flower 1.24

The Flower Team is excited to announce the release of Flower 1.24 stable 🎉

Quantum Federated Learning using Flower

Bridging Quantum Computing and Federated Learning with PennyLane and Flower

Announcing Flower's Collaboration with Red Hat

Flower Labs and Red Hat join forces to advance scalable Federated AI on scientific computing platforms

Announcing Flower 1.23

The Flower Team is excited to announce the release of Flower 1.23 stable 🎉

Announcing the Decentralized AI Hackathon Winners

The winning teams at the Decentralized AI Hackathon tell us what they built!

Scaling Federated AI for the NHS in Secure Research Environments

Discover how Flower's integration into Secure Research Environments is revolutionizing federated AI for the NHS.

Announcing Flower SuperGrid

SuperGrid, the next generation platform for scalable and production-grade federated AI.

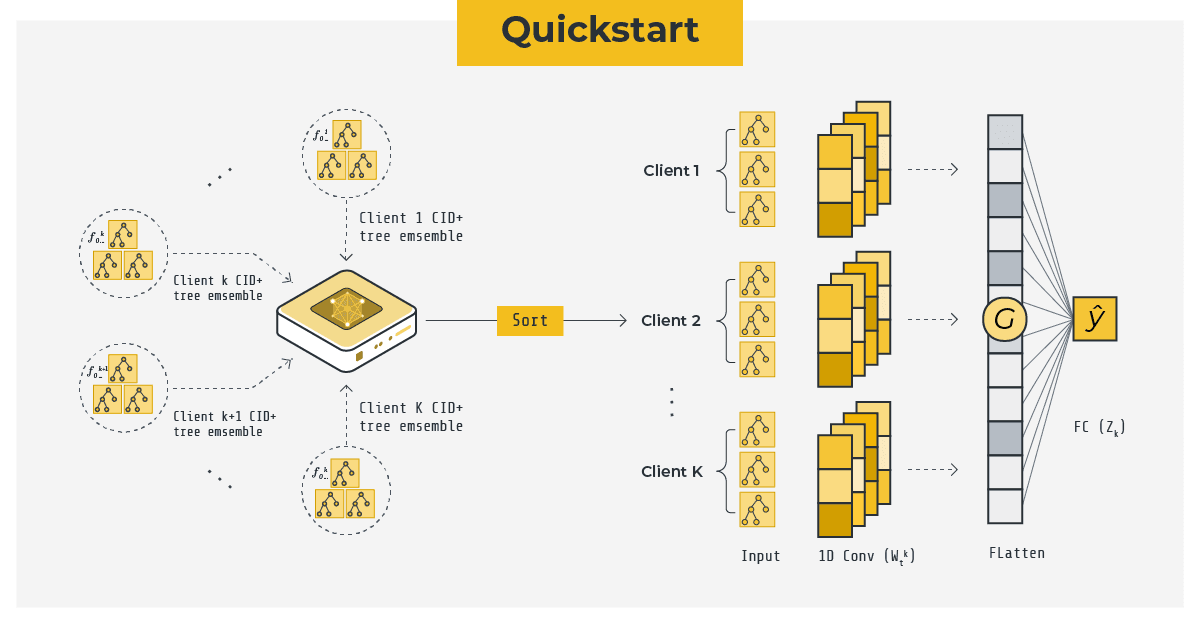

Quickstart for the Decentralized AI Hackathon

🔥 Just two days left until the Decentralized AI Hackathon at Stanford!

Announcing Flower 1.22

The Flower Team is excited to announce the release of Flower 1.22 stable 🎉

Announcing Flower 1.21

The Flower Team is excited to announce the release of Flower 1.21 stable 🎉

Announcing Flower 1.20

The Flower Team is excited to announce the release of Flower 1.20 stable 🎉

Announcing Flower 1.19

The Flower Team is excited to announce the release of Flower 1.19 stable 🎉

Introducing the Flower Intelligence Kotlin SDK

Build privacy-first AI apps on Android using the new Kotlin SDK for Flower Intelligence

Photon: Federated Pre-training of Large Language Models

A New SOTA in Efficient and Robust Federated LLM Pre-training

Launching Flower Pilot Program Batch 3

We are launching the call for the next wave of collaborations in the Flower Pilot Program.

Announcing Flower 1.18

The Flower Team is excited to announce the release of Flower 1.18 stable 🎉

Decoupled Embeddings for Pre-training (DEPT)

New breakthrough in embeddings for decentralized AI

Announcing Flower 1.17

The Flower Team is excited to announce the release of Flower 1.17 stable 🎉

Flower Labs is now ISO 27001 compliant

Flower Labs is now ISO 27001 certified. We uphold the highest security standards to protect data and privacy. Learn how this benefits our community.

Announcing BloodCounts! and Flower Partnership

BloodCounts! and Flower Partner to Revolutionise Early Diagnosis of Global Haematological Conditions

Using Vana and Flower Together

Building Decentralized, User-Owned AI with Vana and Flower

From Theory to Practice: Unlocking the Next Phase of Federated AI

What’s the next step in your journey towards federated AI mastery?

Flower Intelligence

On-device AI Privacy and Speed with Cloud-based AI Scale and Simplicity

Announcing Flower 1.16

The Flower Team is excited to announce the release of Flower 1.16 stable 🎉

Revolutionizing Healthcare with Federated AI

Eye2Gene’s Demo Showcase Using Flower.

Pioneering the Future of Medical Imaging with Federated Learning

Revolutionize medical imaging while safeguarding sensitive patient data using Flower and federated learning.

Announcing Flower 1.15.2

The Flower Team is excited to announce the release of Flower 1.15.2 stable 🎉

Announcing Flower 1.15.1

The Flower Team is excited to announce the release of Flower 1.15.1 stable 🎉

Announcing Flower 1.15

The Flower Team is excited to announce the release of Flower 1.15 stable 🎉

Announcing Flower 1.14

The Flower Team is excited to announce the release of Flower 1.14 stable 🎉

Announcing Flower Datasets 0.5.0

The Flower Team is excited to announce the release of Flower Datasets 0.5.0 🎉

Federated Datasets in Research

An overview of the datasets used in FL research.

Announcing Flower 1.13.1

The Flower Team is excited to announce the release of Flower 1.13.1 stable 🎉

Announcing Flower 1.13

The Flower Team is excited to announce the release of Flower 1.13 stable 🎉

Announcing Flower Datasets 0.4.0

The Flower Team is excited to announce the release of Flower Datasets 0.4.0 🎉

Announcing FlowerTune LLM Leaderboard

The Flower Team is excited to announce the FlowerTune LLM Leaderboard

Announcing Flower 1.12

The Flower Team is excited to announce the release of Flower 1.12 stable 🎉

Announcing Flower 1.11.1

The Flower Team is excited to announce the release of Flower 1.11.1 stable 🎉

Announcing Flower 1.11

The Flower Team is excited to announce the release of Flower 1.11 stable 🎉

Announcing Flower Datasets 0.3.0

The Flower Team is excited to announce the release of Flower Datasets 0.3.0 🎉

Announcing Flower 1.10

The Flower Team is excited to announce the release of Flower 1.10 stable 🎉

In the Jungle of Federated Learning Frameworks

A comparison of open-source FL frameworks and a new FL comparison suite.

Announcing Flower Datasets 0.2.0

The Flower Team is excited to announce the release of Flower Datasets 0.2.0 🎉

Announcing Flower Node Authentication

We are announcing the new Flower node authentication feature in Flower 1.9

Announcing Flower Docker images

We are excited to announce the release of our new Flower Docker images 🐳

Announcing Flower 1.9

The Flower Team is excited to announce the release of Flower 1.9 stable 🎉

Announcing Flower 1.8

The Flower Team is excited to announce the release of Flower 1.8 stable 🎉

Announcing NVIDIA and Flower Collaboration

NVIDIA and Flower Collaborate to Improve Federated Learning Development for Researchers, Data Scientists and AI Developers.

Launching Flower Pilot Program: Batch Two

We are launching the call for the next wave of participants in the Flower Pilot Program!

Announcing Flower Discuss

We are happy to announce the launch of our official community forum.

Introducing FlowerLLM

FlowerLLM: The World's first 1.3B parameter LLM trained via Federated Learning

LLM FlowerTune: Federated LLM Fine-tuning with Flower

Check out our new example for federated LLM fine-tuning

Flower Chinese API Documentation Released

Flower's Chinese API documentation is now available.

Flower Labs raises $20M Series A

Accelerate the democratization of decentralized and federated AI

Federated XGBoost: Flower is all you need

Check out our new features for federated XGBoost

Install Flower with Conda

Flower is now officially distributed on conda-forge!

Announcing Flower 1.7

The Flower Team is excited to announce the release of Flower 1.7 stable 🎉

Federated Learning with MLX and Flower

MLX is a NumPy-like array framework designed for efficient and flexible machine learning on Apple silicon.

Raspberry Pi 5: Ready for Federated Vision

We benchmarked the new Raspberry Pi 5 against its predecessor on a wide range of new and old vision models.

Federated XGBoost with bagging aggregation

Check out our new examples and docs for federated XGBoost with bagging aggregation

Announcing Flower 1.6

The Flower Team is excited to announce the release of Flower 1.6 stable 🎉

Announcing Flower Datasets

Reliable, thoroughly tested dataset partitioning schemes that create fully reproducible Federated Learning experiments are now possible with Flower Datasets.

Flower User Survey 2023

Are you a Flower user or active in the FL community? Please support us on our journey to improve our framework by completing this survey 🌼

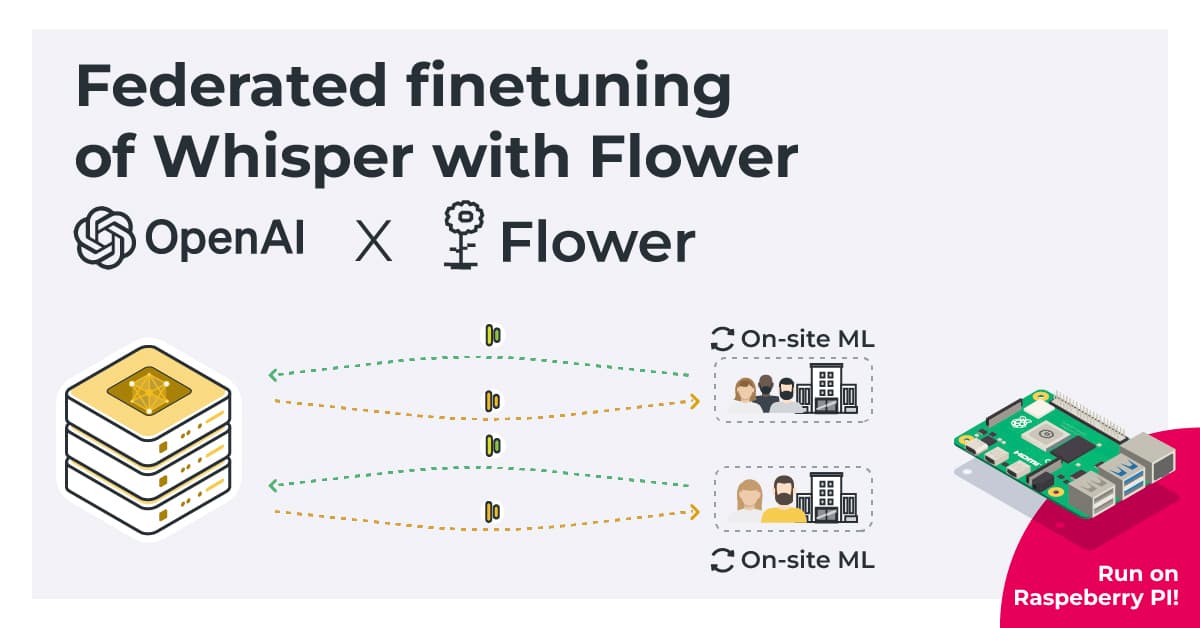

Federated Finetuning of OpenAI's Whisper

Check out the new code example federating OpenAI's Whisper for the downstream task of keyword spotting.

Announcing Flower 1.5

The Flower Team is excited to announce the release of Flower 1.5 stable 🎉

Federated Learning Standards

A collaborative approach to improving research reproducibility and building an interoperable future.

Announcing the Summer of Reproducibility

The Flower Team is excited to announce the Summer of Reproducibility 🏖️

Announcing Flower 1.4

The Flower Team is excited to announce the release of Flower 1.4 stable 🎉

Using XGBoost with Flower 🌳

Check out our new quickstart example leveraging XGBoost to federate learning in a horizontal setting!

Federated Learning with Self-Supervision

Yasar Abbas Ur Rehman explores combining Federated Learning (FL) & Self-Supervised Learning (SSL) for privacy-preserving, decentralized feature learning.

Announcing Flower Summit 2023

Every year, we bring together the Flower community to exchange ideas and plan for the future. We are excited to announce the Flower Summit 2023!

Announcing Flower Labs

An AI future that is collaborative, open and distributed.

Federated Learning with fastai and Flower

Fast.ai strives to 'make neural nets uncool again' by making them very simple to use. As you'll see, it's a great match with Flower!

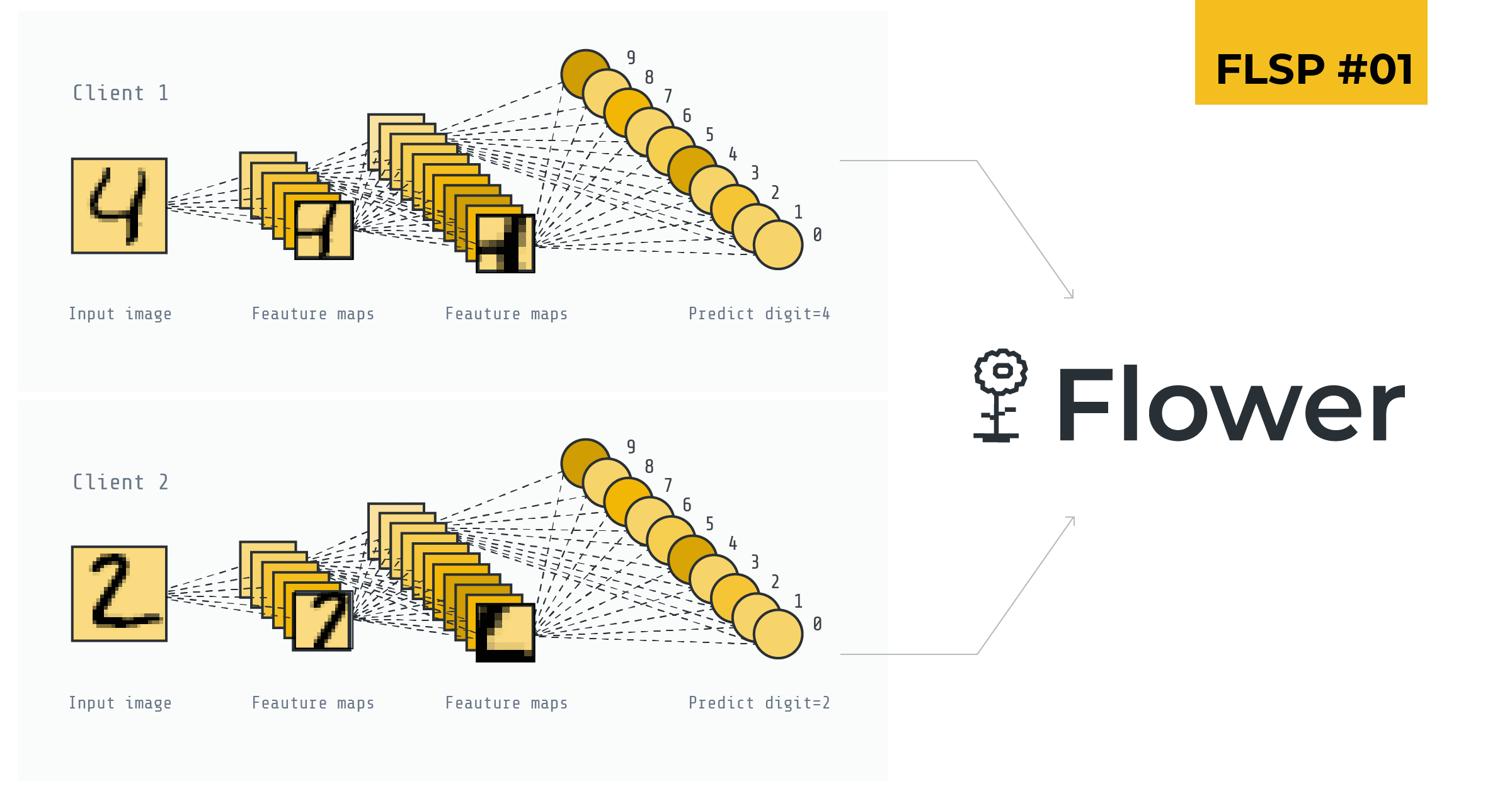

🎒 FL Starter Pack: FedProx on MNIST using a CNN

The MNIST experiment of the paper that demonstrated the FedProx framework is now implemented in baselines!

Announcing the Flower Next Pilot Program

Are you using federated learning to push AI forward? Join the pilot program, work with us to accelerate your project and develop improvements for the entire Flower community.

Announcing Flower 1.3

The Flower Team is excited to announce the release of Flower 1.3 stable

Monitoring Simulation in Flower

Learn how to monitor a simulation's resource consumption to make informed decisions on resource allocation.

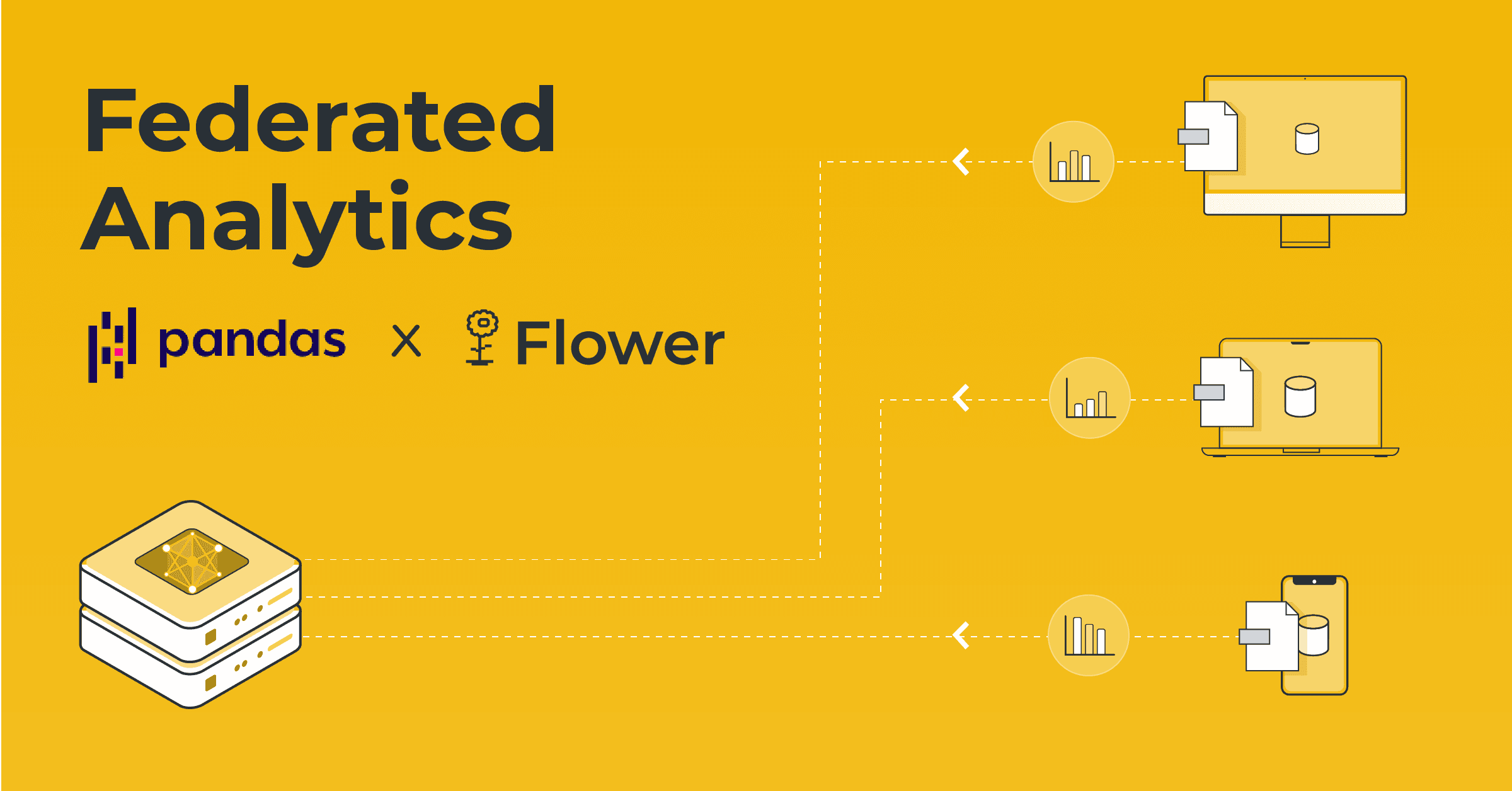

Federated Analytics with Flower and Pandas

Federated analytics is the practice of applying data science methods to the analysis of raw data distributed amongst a set of clients.

Announcing Flower 1.2

The Flower Team is excited to announce the release of Flower 1.2 stable

🎒 FL Starter Pack: FedAvg on MNIST using a CNN

New baseline! McMahan et al.'s pioneering FL paper finally added to baselines!

Announcing Flower 1.1

The Flower Team is excited to announce the release of Flower 1.1 stable

Private Ads with Brave & Flower: Call for volunteers in FL user study

We're collaborating with Brave to pioneer FL-based ad serving! Read on to find out how you can help.

Announcing Flower 1.0

The Flower Team is excited to announce the release of Flower 1.0 stable

Flower 0.19 Release

Flower 0.19 is available! Read on to find out what's new.

Flower's new Design Language

It's finally here: Flower's new design language!

JAX meets Flower - Federated Learning with JAX

JAX is a high-performance machine learning framework built by Google researchers. It automatically differentiates and optimizes functions, and can now easily be run federated.

Flower 0.18 Release

Flower 0.18 is finally available! Read on to find out what's new.

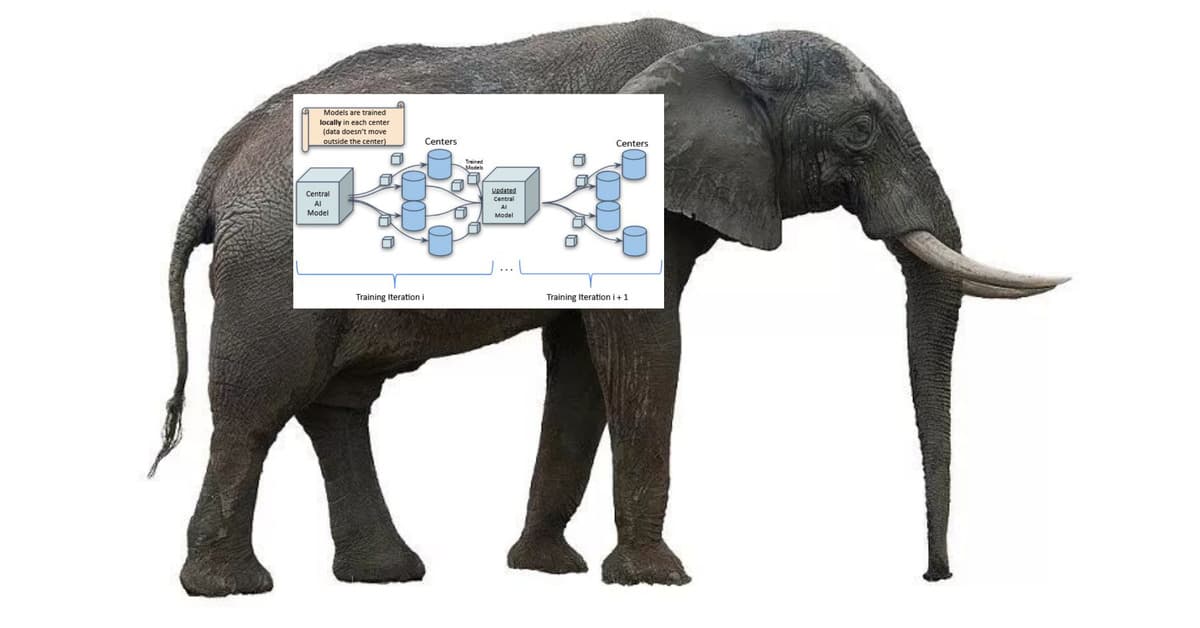

Federated Learning against Cancer in the Wild: How to Eat an Elephant

Setting up a federated network across clinical centers including clinical requirements to think about.

Federated Learning on Android devices with Flower

Federated Learning on Android devices with Flower and TFLite.

Speech models, federated! (SpeechBrain x Flower)

Federated Speech Model Training via SpeechBrain and Flower.

Federated Scikit-learn Using Flower

Scikit-learn models can now be trained on distributed data with Flower.

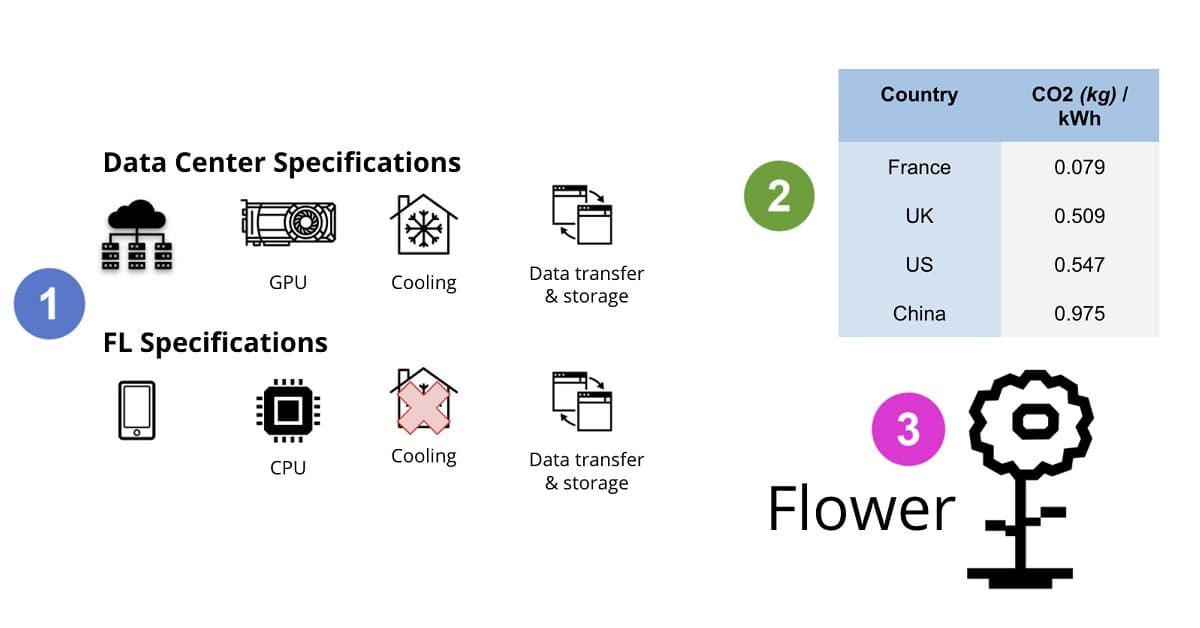

What is the Carbon Footprint of Federated Learning?

Comparing the carbon footprint of centralized and federated machine learning using Flower.

Running MXNet Federated - MXNet meets Flower

MXNet is a highly efficient and flexible machine learning framework. With Flower you can now build MXNet federated learning workloads for the very first time.

PyTorch: From Centralized To Federated

Federate your existing PyTorch machine learning projects with Flower.

Google Summer of Code: Project Ideas

List of Ideas for Google Summer of Code 2021

Single-Machine Simulation of Federated Learning Systems

How can we simulate a full Federated Learning system on a single machine?

Running Federated Learning applications on Embedded Devices

Federated Learning is gaining traction and is now used in several commercial applications and services. See how you can deploy FL applications on Embedded Devices.

Federated Learning in less than 20 lines of code

Can we build a fully-fledged Federated Learning system in less than 20 lines of code? Spoiler alert: yes, we can.

Hello, Flower Blog!

Today we are launching the Flower Blog!